From GPT-4 to AGI: Counting the OOMs

AGI by 2027 is strikingly believable. GPT-2 to GPT-4 took us from ~preschooler to ~neat high-schooler skills in 4 years. Tracing trendlines in compute (~0.5 orders of magnitude or OOMs/year), algorithmic efficiencies (~0.5 OOMs/year), and “unhobbling” gains (from chatbot to agent), we also can merely restful quiz yet any other preschooler-to-high-schooler-sized qualitative soar by 2027.

Peek. The fashions, they right want to learn. Or no longer it may perhaps well well perhaps well be wanted to note this. The fashions, they right want to learn.

Ilya Sutskever (circa 2015, by process of Dario Amodei)

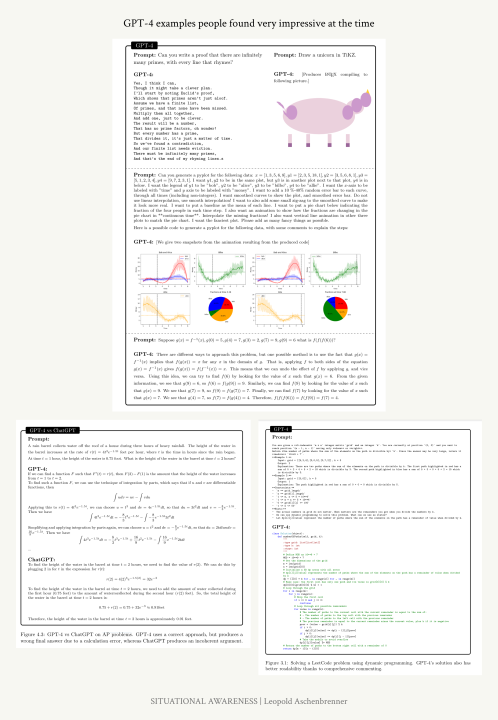

GPT-4’s capabilities got right here as a shock to many: an AI system that would write code and essays, may perhaps well perhaps well motive thru hard math considerations, and ace faculty exams. About a years ago, most thought these were impenetrable partitions.

Nevertheless GPT-4 changed into merely the continuation of a decade of breakneck growth in deep learning. A decade earlier, fashions may perhaps well perhaps well barely title straightforward photos of cats and dogs; four years earlier, GPT-2 may perhaps well perhaps well barely string collectively semi-believable sentences. Now we’re fast saturating the total benchmarks we can arrive up with. And yet this dramatic growth has merely been the of consistent trends in scaling up deep learning.

There were other folks that agree with viewed this for some distance longer. They were scoffed at, nonetheless all they did changed into have faith the trendlines. The trendlines are intense, and as well they were right. The fashions, they right want to learn; you scale them up, and as well they learn more.

I plot the next convey: it’s strikingly believable that by 2027, fashions will doubtless be in a build to discontinuance the work of an AI researcher/engineer. That doesn’t require believing in sci-fi; it right requires believing in straight lines on a graph.

On this part, I will merely “count the OOMs” (OOM=repeat of magnitude, 10x=1 repeat of magnitude): see on the trends in 1) compute2) algorithmic efficiencies (algorithmic growth that we can deem of as rising “efficient compute”), and 3) ”unhobbling” gains (fixing evident methods whereby fashions are hobbled by default, unlocking latent capabilities and giving them instruments, resulting in step-adjustments in usefulness). We hint the growth in every over four years sooner than GPT-4, and what we also can merely restful quiz within the four years after, thru the discontinuance of 2027. Given deep learning’s consistent enhancements for every OOM of efficient compute, we can advise this to challenge future growth.

Publicly, issues were easy for a year since the GPT-4 free up, because the next generation of fashions has been within the oven—leading some to proclaim stagnation and that deep learning is hitting a wall.1 Nevertheless by counting the OOMs, we derive a watch at what we also can merely restful the truth is quiz.

The upshot is pretty straightforward. GPT-2 to GPT-4—from fashions that were impressive for customarily managing to string collectively about a coherent sentences, to fashions that ace high-college exams—changed into no longer a one-time appreciate. We’re racing thru the OOMs extraordinarily fast, and the numbers elaborate we also can merely restful quiz yet any other ~100,000x efficient compute scaleup—resulting in yet any other GPT-2-to-GPT-4-sized qualitative soar—over four years. Furthermore, and seriously, that doesn’t right indicate a smarter chatbot; picking the many evident low-hanging fruit on “unhobbling” gains also can merely restful contrivance conclude us from chatbots to agents, from a tool to something that appears to be like more appreciate tumble-in some distance-off worker replacements.

While the inference is straightforward, the implication is hanging. One other soar appreciate that totally may perhaps well perhaps well contrivance conclude us to AGI, to fashions as neat as PhDs or consultants that will well perhaps work beside us as coworkers. Probably most importantly, if these AI programs may perhaps well perhaps well automate AI learn itself, that would contrivance in poke intense feedback loops—the realm of the subsequent part within the series.

Even now, barely someone is pricing all this in. Nevertheless situational awareness on AI isn’t the truth is that laborious, whenever you step aid and see on the trends. Ought to you defend being surprised by AI capabilities, right originate counting the OOMs.

The final four years

We have machines now that we can basically consult with appreciate humans. It’s a excellent testomony to the human ability to alter that this looks common, that we’ve change into inured to the tear of growth. Then again it’s price stepping aid and taking a be conscious on the growth of right the final few years.

GPT-2 to GPT-4

Let me remind you of how some distance we got right here in precisely the ~4 (!) years leading as a lot as GPT-4.

GPT-2 (2019) ~ preschooler: “Wow, it goes to string collectively about a believable sentences.” A in actuality-cherry-picked instance of a semi-coherent epic about unicorns within the Andes it generated changed into extremely impressive on the time. And yet GPT-2 may perhaps well perhaps well barely count to 5 with out getting tripped up;2 when summarizing an article, it right barely outperformed selecting 3 random sentences from the article.3

Evaluating AI capabilities with human intelligence is difficult and incorrect, nonetheless I deem it’s informative to take into account the analogy right here, even though it’s extremely unhealthy. GPT-2 changed into elegant for its elaborate of language, and its ability to customarily generate a semi-cohesive paragraph, or customarily answer straightforward correct questions precisely. It’s what would were impressive for a preschooler.

GPT-3 (2020)4 ~ elementary schooler: “Wow, with right some few-shot examples it goes to discontinuance some straightforward precious tasks.” It started being cohesive over even a pair of paragraphs great more consistently, and may perhaps well perhaps well factual grammar and discontinuance some very common arithmetic. For the first time, it changed into additionally commercially precious in about a narrow methods: as an illustration, GPT-3 may perhaps well perhaps well generate straightforward reproduction for search engine advertising and advertising.

All all over again, the comparison is unhealthy, nonetheless what impressed other folks about GPT-3 is per chance what would were impressive for an elementary schooler: it wrote some common poetry, may perhaps well perhaps well elaborate richer and coherent stories, may perhaps well perhaps well originate as a lot as discontinuance rudimentary coding, may perhaps well perhaps well pretty reliably learn from straightforward instructions and demonstrations, and so on.

GPT-4 (2023) ~ neat high schooler: “Wow, it goes to write down pretty subtle code and iteratively debug, it goes to write down intelligently and sophisticatedly about hard topics, it goes to motive thru hard high-college competition math, it’s beating the gigantic majority of high schoolers on whatever exams we can give it, etc.” From code to math to Fermi estimates, it goes to deem and motive. GPT-4 is now precious in my each day tasks, from serving to write down code to revising drafts.

On all the issues from AP exams to the SAT, GPT-4 rankings better than the gigantic majority of high schoolers.

Keep in mind that, even GPT-4 remains to be seriously uneven; for some tasks it’s great better than neat high-schoolers, while there are varied tasks it goes to’t yet discontinuance. That acknowledged, I tend to deem these forms of barriers arrive down to evident methods fashions are restful hobbled, as I’ll discuss in-depth later. The raw intelligence is (mostly) there, even though the fashions are restful artificially constrained; it’ll contrivance conclude extra work to release fashions being in a build to totally apply that raw intelligence all the blueprint in which thru gains.

The trends in deep learning

The tear of deep learning growth within the final decade has merely been unheard of. A mere decade ago it changed into modern for a deep learning system to title straightforward photos. Nowadays, we defend attempting to arrive aid up with new, ever more difficult exams, and yet every unique benchmark is right away cracked. It frail to contrivance conclude decades to crack extensively-frail benchmarks; now it feels appreciate mere months.

We’re actually working out of benchmarks. As an epic, my friends Dan and Collin made a benchmark called MMLU about a years ago, in 2020. They hoped to within the halt plot a benchmark that would stand the take a look at of time, identical to the total hardest exams we give highschool and school students. Fair three years later, it’s basically solved: fashions appreciate GPT-4 and Gemini derive ~90%.

Extra broadly, GPT-4 mostly cracks the total common highschool and school aptitude exams.5 (And even the one year from GPT-3.5 to GPT-4 customarily took us from well below median human performance to the discontinuance of the human differ.)

Or take into account the MATH benchmarka contrivance of hard arithmetic considerations from high-college math competitions.6 When the benchmark changed into released in 2021, the finest fashions easiest got ~5% of considerations right. And the distinctive paper notorious: “Furthermore, we discover that merely growing budgets and model parameter counts will doubtless be impractical for achieving solid mathematical reasoning if scaling trends continue […]. To agree with more traction on mathematical convey fixing we are capable of doubtless want unique algorithmic traits from the broader learn neighborhood”—we may perhaps well perhaps want elementary unique breakthroughs to resolve MATH, or so that they thought. A look of ML researchers predicted minimal growth over the arrival years;7 and yet within right a year (by mid-2022), the finest fashions went from ~5% to 50% accuracy; now, MATH is de facto solvedwith recent performance over 90%.

Repeatedly again, year after year, skeptics agree with claimed “deep learning won’t be in a build to discontinuance X” and were mercurial confirmed contaminated.8If there’s one lesson we’ve realized from the past decade of AI, it’s that you may perhaps well perhaps well per chance also merely restful never wager against deep learning.

Now the hardest unsolved benchmarks are exams appreciate GPQAa contrivance of PhD-level biology, chemistry, and physics questions. A lot of the questions read appreciate gibberish to me, and even PhDs in varied scientific fields spending 30+ minutes with Google barely score above random probability. Claude 3 Opus for the time being gets ~60%,9 when put next to in-domain PhDs who derive ~80%—and I quiz this benchmark to tumble to boot, within the next generation or two.

How did this happen? The magic of deep learning is that it right works—and the trendlines were astonishingly consistent, no topic naysayers at every turn.

With every OOM of efficient compute, fashions predictably, reliably enhance.10 If we can count the OOMs, we can (roughly, qualitatively) extrapolate ability enhancements.11 That’s how about a prescient other folks noticed GPT-4 coming.

We can decompose the growth within the four years from GPT-2 to GPT-4 into three classes of scaleups:

- Compute: We’re the usage of great bigger computers to prepare these fashions.

- Algorithmic efficiencies: There’s a continuous development of algorithmic growth. Many of these act as “compute multipliers,” and we can set up them on a unified scale of rising efficient compute.

- ”Unhobbling” gains: By default, fashions learn masses of impossible raw capabilities, nonetheless they are hobbled in every fashion of dull methods, limiting their purposeful fee. With straightforward algorithmic enhancements appreciate reinforcement learning from human feedback (RLHF), chain-of-thought (CoT), instruments, and scaffolding, we can release necessary latent capabilities.

We can “count the OOMs” of enchancment alongside these axes: that is, hint the scaleup for every in objects of efficient compute. 3x is 0.5 OOMs; 10x is 1 OOM; 30x is 1.5 OOMs; 100x is 2 OOMs; and so on. We can additionally see at what we also can merely restful quiz on high of GPT-4, from 2023 to 2027.

I’ll battle thru every-by-one, nonetheless the upshot is apparent: we’re fast racing thru the OOMs. There are possible headwinds within the solutions wall, which I’ll take care of—nonetheless total, it looks doubtless that we also can merely restful quiz yet any other GPT-2-to-GPT-4-sized soar, on high of GPT-4, by 2027.

Compute

I’ll originate with the most customarily-talked about driver of as a lot as the moment growth: throwing (loads) more compute at fashions.

Many of us judge that this is merely as a result of Moore’s Legislation. Nevertheless even within the former days when Moore’s Legislation changed into in its heyday, it changed into comparatively glacial—per chance 1-1.5 OOMs per decade. We’re seeing great more quickly scaleups in compute—conclude to 5x the rate of Moore’s law—as an alternate as a result of gigantic funding. (Spending even a million greenbacks on a single model frail to be an unhealthy thought no one would entertain, and now that’s pocket replace!)

| Model | Estimated compute | Enhance |

| GPT-2 (2019) | ~4e21 FLOP | |

| GPT-3 (2020) | ~3e23 FLOP | + ~2 Uncles |

| GPT-4 (2023) | 8e24 to 4e25 FLOP | + ~1.5–2 OOMs |

We can advise public estimates from Epoch AI (a offer extensively respected for its comely prognosis of AI trends) to hint the compute scaleup from 2019 to 2023. GPT-2 to GPT-3 changed into a hasty scaleup; there changed into a blinding overhang of compute, scaling from a smaller experiment to the usage of a total datacenter to prepare a blinding language model. With the scaleup from GPT-3 to GPT-4, we transitioned to the as a lot as the moment regime: having to fabricate an fully unique (great bigger) cluster for the next model. And yet the dramatic growth persevered. Overall, Epoch AI estimates imply that GPT-4 working in direction of frail ~3,000x-10,000x more raw compute than GPT-2.

In astronomical strokes, this is correct the continuation of a longer-working development. For the final decade and a half, primarily as a result of astronomical scaleups in funding (and specializing chips for AI workloads within the derive of GPUs and TPUs), the working in direction of compute frail for frontier AI programs has grown at roughly ~0.5 OOMs/year.

The compute scaleup from GPT-2 to GPT-3 in a year changed into an odd overhang, nonetheless the total indications are that the longer-flee development will continue. The SF-rumor-mill is abreast with dramatic tales of astronomical GPU orders. The investments eager will doubtless be unheard of—nonetheless they are in poke. I am going into this more later within the series, in IIIa. Racing to the Trillion-Greenback Cluster; in retaining with that prognosis, a extra 2 OOMs of compute (a cluster within the $10s of billions) looks very doubtless to happen by the discontinuance of 2027; even a cluster nearer to +3 OOMs of compute ($100 billion+) looks believable (and is rumored to be within the works at Microsoft/OpenAI).

Algorithmic efficiencies

While large investments in compute derive the total consideration, algorithmic growth also can very well be a equally necessary driver of growth (and has been dramatically underrated).

To see right how huge of a deal algorithmic growth also can also be, take into account the next illustration of the tumble in tag to attain ~50% accuracy on the MATH benchmark (highschool competition math) over right two years. (For comparison, a laptop science PhD pupil who didn’t namely appreciate math scored 40%, so this is already comparatively factual.) Inference efficiency improved by nearly 3 OOMs—1,000x—in no longer as a lot as 2 years.

Though these are numbers right for inference efficiency (that will well perhaps even merely or also can merely no longer correspond to working in direction of efficiency enhancements, where numbers are more difficult to infer from public data), they plot certain there may perhaps be an broad quantity of algorithmic growth that you may perhaps well perhaps well per chance also factor in and going down.

On this part, I’ll separate out two forms of algorithmic growth. Here, I’ll originate by covering “within-paradigm” algorithmic enhancements—these that merely consequence in better nefarious fashions, and that straightforwardly act as compute efficiencies or compute multipliers. Shall we embrace, a smarter algorithm also can allow us to total the the same performance nonetheless with 10x less working in direction of compute. In turn, that would act as a 10x (1 OOM) enlarge in efficient compute. (Later, I’ll duvet “unhobbling,” which you may perhaps well perhaps well per chance also deem of as “paradigm-expanding/application-expanding” algorithmic growth that unlocks capabilities of nefarious fashions.)

If we step aid and see on the lengthy-term trends, we appear to receive unique algorithmic enhancements at a pretty consistent rate. Particular particular person discoveries appear random, and at every turn, there appear insurmountable boundaries—nonetheless the lengthy-flee trendline is predictable, a straight line on a graph. Belief the trendline.

We have the finest data for ImageNet (where algorithmic learn has been mostly public and we’ve data stretching aid a decade), for which we’ve consistently improved compute efficiency by roughly ~0.5 OOMs/year all the blueprint in which thru the 9-year interval between 2012 and 2021.

That’s a astronomical deal: which ability 4 years later, we can discontinuance the the same performance for ~100x less compute (and concomitantly, great greater performance for the the same compute!).

Sadly, since labs don’t submit internal data on this, it’s more difficult to measure algorithmic growth for frontier LLMs over the final four years. EpochAI has unique work replicating their results on ImageNet for language modeling, and estimate a identical ~0.5 OOMs/year of algorithmic efficiency development in LLMs from 2012 to 2023. (This has wider error bars even though, and doesn’t seize some more recent gains, since the leading labs agree with stopped publishing their algorithmic efficiencies.)

Extra straight taking a be conscious on the final 4 years, GPT-2 to GPT-3 changed into basically a straightforward scaleup (in retaining with the paper), nonetheless there were many publicly-identified and publicly-inferable gains since GPT-3:

- We can infer gains from API costs:13

- GPT-4, on free up, fee ~the the same as GPT-3 when it changed into released, no topic the certainly broad performance enlarge.14 (If we discontinuance a naive and oversimplified aid-of-the-envelope estimate in retaining with scaling approved pointers, this suggests that perhaps roughly half the efficient compute enlarge from GPT-3 to GPT-4 got right here from algorithmi c enhancements.15)

- For the rationale that GPT-4 free up a year ago, OpenAI costs for GPT-4-level fashions agree with fallen yet any other 6x/4x (input/output) with the free up of GPT-4o.

- Gemini 1.5 Flash, recently released, affords between “GPT-3.75-level” and GPT-4-level performance,16 while costing 85x/57x (input/output) no longer as a lot as the distinctive GPT-4 (unheard of gains!).

- Chinchilla scaling approved pointers give a 3x+ (0.5 OOMs+) efficiency appreciate.17

- Gemini 1.5 Legit claimed fundamental compute efficiency gains (outperforming Gemini 1.0 Ultra, while the usage of “tremendously less” compute), with Mixture of Experts (MoE) as a highlighted architecture replace. Rather about a papers additionally convey a in actuality intensive a pair of on compute from MoE.

- There were many tweaks and gains on architecture, data, working in direction of stack, etc., the total time.18

Attach collectively, public data suggests that the GPT-2 to GPT-4 soar integrated 1-2 OOMs of algorithmic efficiency gains.19

Over the 4 years following GPT-4, we also can merely restful quiz the event to continue:20 on moderate 0.5 OOMs/year of compute efficiency, i.e. ~2 OOMs of gains when put next to GPT-4 by 2027. While compute efficiencies will change into more difficult to receive as we opt the low-hanging fruit, AI lab investments in money and skills to receive unique algorithmic enhancements are rising fast.21 (The publicly-inferable inference fee efficiencies, no no longer as a lot as, don’t appear to agree with slowed down in any appreciate.) On the high discontinuance, lets even survey more elementary, Transformer-appreciate breakthroughs22with even bigger gains.

Attach collectively, this suggests we also can merely restful quiz something appreciate 1-3 OOMs of algorithmic efficiency gains (when put next to GPT-4) by the discontinuance of 2027, per chance with a easiest wager of ~2 OOMs.

The details wall

There may perhaps be a per chance necessary offer of variance for all of this: we’re working out of data superhighway data. That will well perhaps well indicate that, very soon, the naive manner to pretraining elevated language fashions on more scraped data may perhaps well perhaps well originate hitting excessive bottlenecks.

Frontier fashions are already knowledgeable on great of the solutions superhighway. Llama 3, as an illustration, changed into knowledgeable on over 15T tokens. Commonplace Creep, a dump of great of the solutions superhighway frail for LLM working in direction ofis>100T tokens raw, even though great of that is spam and duplication (e.g., a barely straightforward deduplication results in 30T tokens, implying Llama 3 would already be the usage of basically the total data). Furthermore, for more particular domains appreciate code, there are masses of fewer tokens restful, e.g. public github repos are estimated to be in low trillions of tokens.

You furthermore mght can chase seriously extra by repeating data, nonetheless academic work on this suggests that repetition easiest gets you to this level, finding that after 16 epochs (a 16-fold repetition), returns diminish extraordinarily hasty to nil. At some level, even with more (efficient) compute, making your fashions better can change into great more difficult as a result of the solutions constraint. This isn’t to be understated: we’ve been riding the scaling curves, riding the wave of the language-modeling-pretraining-paradigm, and with out something unique right here, this paradigm will (no no longer as a lot as naively) flee out. No topic the massive investments, we’d plateau.The complete labs are rumored to be making large learn bets on unique algorithmic enhancements or approaches to derive spherical this. Researchers are purportedly attempting many strategies, from artificial data to self-play and RL approaches. Enterprise insiders seem like very bullish: Dario Amodei (CEO of Anthropic) recently acknowledged on a podcast: “whenever you happen to see at it very naively we’re no longer that removed from working out of data […] My wager is that this can no longer be a blocker […] There’s right many numerous methods to discontinuance it.” Keep in mind that, any learn results on this are proprietary and no longer being printed for the time being.

Besides to to insider bullishness, I deem there’s a solid intuitive case for why it goes to be that you may perhaps well perhaps well per chance also factor in to receive methods to prepare fashions with great better sample efficiency (algorithmic enhancements that let them learn more from dinky data). Attach in mind how you or I would learn from a terribly dense math textbook:

- What a latest LLM does for the length of working in direction of is, the truth is, very very mercurial waft the textbook, the words right flying byno longer spending great mind energy on it.

- Rather, whenever you or I read that math textbook, we read a pair pages slowly; then agree with an internal monologue relating to the cloth in our heads and discuss it with about a survey-friends; read yet any other page or two; then strive some be conscious considerations, fail, strive them again in a certain manner, derive some feedback on these considerations, strive again until we derive a convey right; and so on, until at final the cloth “clicks.”

- You or I additionally wouldn’t learn great in any appreciate from a chase thru a dense math textbook if all lets discontinuance changed into stride thru it appreciate LLMs.23

- Nevertheless per chance, then, there are methods to incorporate aspects of how humans would digest a dense math textbook to let the fashions learn great more from dinky data. In a simplified sense, this vogue of ingredient—having an internal monologue about cloth, having a dialogue with a survey-buddy, attempting and failing at considerations until it clicks—is what many artificial data/self-play/RL approaches strive and discontinuance.24

The former disclose of the art of working in direction of fashions changed into straightforward and naive, nonetheless it absolutely labored, so no one in actuality tried laborious to crack these approaches to sample efficiency. Now that it goes to also merely change into more of a constraint, we also can merely restful quiz the total labs to invest billions of bucks and their smartest minds into cracking it. A typical sample in deep learning is that it takes loads of effort (and a glorious deal of failed initiatives) to derive the details right, nonetheless at final some model of the evident and easy ingredient right works. Given how deep learning has managed to smash thru every supposed wall over the final decade, my nefarious case is that this also can also be identical right here.

Furthermore, it the truth is looks that you may perhaps well perhaps well per chance also factor in that cracking the sort of algorithmic bets appreciate artificial data may perhaps well perhaps well dramatically toughen fashions. Here’s an intuition pump. Present frontier fashions appreciate Llama 3 are knowledgeable on the solutions superhighway—and the solutions superhighway is mostly crap, appreciate e-commerce or search engine advertising or whatever. Many LLMs employ the gigantic majority of their working in direction of compute on this crap, moderately than on in actuality high quality data (e.g. reasoning chains of alternative folks working thru hard science considerations). Imagine whenever you happen to may perhaps well perhaps well employ GPT-4-level compute on fully extraordinarily high quality data—it’s on the total a terrific, great more capable model.

A see aid at AlphaGo—the first AI system that beat the realm champions on the game of Shuffle, decades sooner than it changed into thought that you may perhaps well perhaps well per chance also factor in—is precious right here to boot.25

- In step 1, AlphaGo changed into knowledgeable by imitation learning on knowledgeable human Shuffle video games. This gave it a foundation.

- In step 2, AlphaGo played tens of millions of video games against itself. This let it change into superhuman at Shuffle: take into account the notorious switch 37 within the game against Lee Sedol, an awfully odd nonetheless honest right switch a human would never agree with played.

Rising the identical of step 2 for LLMs is a key learn convey for overcoming the solutions wall (and, moreover, will within the halt be the fundamental to surpassing human-level intelligence).

All of this is to enlighten that data constraints appear to inject stunning error bars both manner into forecasting the arrival years of AI growth. There’s a in actuality precise probability issues stall out (LLMs also can restful be as huge of a deal because the solutions superhighway, nonetheless we wouldn’t derive to the truth is loopy AGI). Nevertheless I deem it’s life like to wager that the labs will crack it, and that doing so will no longer right defend the scaling curves going, nonetheless per chance allow astronomical gains in model ability.

As an aside, this additionally ability that we also can merely restful quiz more variance between the numerous labs in coming years when put next to on the modern time. Up until recently, the disclose of the art methods were printed, so each person changed into basically doing the the same ingredient. (And unique upstarts or commence offer initiatives may perhaps well perhaps well with out misfortune compete with the frontier, since the recipe changed into printed.) Now, key algorithmic solutions are changing into an increasing number of proprietary. I’d quiz labs’ approaches to diverge great more, and a few to plot sooner growth than others—even a lab that looks on the frontier now may perhaps well perhaps well derive caught on the solutions wall while others plot a step forward that permits them to flee ahead. And commence offer can agree with a terrific more difficult time competing. It would absolutely plot issues entertaining. (And if and when a lab figures it out, their step forward may perhaps well perhaps well be the fundamental to AGI, key to superintelligence—one of many United States’ most prized secrets.)

Unhobbling

Indirectly, the hardest to quantify—nonetheless no less necessary—category of enhancements: what I’ll name “unhobbling.”

Imagine if when requested to resolve a laborious math convey, you had to straight answer with the very first ingredient that got right here to mind. It looks evident that you may perhaps well perhaps well per chance agree with a laborious time, excluding for the finest considerations. Nevertheless until recently, that’s how we had LLMs resolve math considerations. As an alternate, most of us work thru the convey step-by-step on a scratchpad, and are in a build to resolve great more hard considerations that manner. “Chain-of-thought” prompting unlocked that for LLMs. No topic comely raw capabilities, they were great worse at math than they would be because they were hobbled in an evident manner, and it took a little algorithmic tweak to release great greater capabilities.

We’ve made astronomical strides in “unhobbling” fashions over the past few years. These are algorithmic enhancements past right working in direction of better nefarious fashions—and customarily easiest advise a allotment of pretraining compute—that unleash model capabilities:

- Reinforcement learning from human feedback (RLHF). Putrid fashions agree with implausible latent capabilities,26 nonetheless they’re raw and extremely laborious to work with. While th e favored thought of RLHF is that it merely censors grunt words, RLHF has been key to making fashions the truth is precious and commercially precious (moderately than making fashions predict random data superhighway textual relate material, derive them to in actuality apply their capabilities to strive and answer your inquire!). This changed into the magic of ChatGPT—well-executed RLHF made fashions usable and precious to precise other folks for the first time. The fashioned InstructGPT paper has a huge quantification of this: an RLHF’d little model changed into identical to a non-RLHF’d>100x elevated model when it involves human rater desire.

- Chain of Belief (CoT). As talked about. CoT started being extensively frail right 2 years ago and can present the identical of a>10x efficient compute enlarge on math/reasoning considerations.

- Scaffolding. Imagine CoT++: moderately than right asking a model to resolve a convey, agree with one model plot a conception of assault, agree with yet any other propose a bunch of that you may perhaps well perhaps well per chance also factor in alternate choices, agree with yet any other critique it, and so on. For instanceon HumanEval (coding considerations), straightforward scaffolding enables GPT-3.5 to outperform un-scaffolded GPT-4. On SWE-Bench (a benchmark of fixing precise-world plot engineering tasks), GPT-4 can easiest resolve ~2% precisely, while with Devin’s agent scaffolding it jumps to 14-23%. (Unlocking company is easiest in its infancy even though, as I’ll discuss more later.)

- Tools: Imagine if humans weren’t allowed to make advise of calculators or computers. We’re easiest on the starting right here, nonetheless ChatGPT can now advise a web browser, flee some code, and so on.

- Context dimension. Fashions agree with gone from 2k token context (GPT-3) to 32k context (GPT-4 free up) to 1M+ context (Gemini 1.5 Legit). This will be a astronomical deal. An improbable smaller nefarious model with, convey, 100k tokens of linked context can outperform a model that is a lot elevated nonetheless easiest has, convey, 4k linked tokens of context—more context is effectively a blinding compute efficiency appreciate.27 Extra in overall, context is key to unlocking many gains of these fashions: as an illustration, many coding gains require realizing stunning parts of a codebase in repeat to usefully make a contribution unique code; or, whenever you happen to’re the usage of a model to permit you to write down a doc at work, it in actuality desires the context from a total bunch linked internal medical doctors and conversations. Gemini 1.5 Legit, with its 1M+ token context, changed into even in a build to learn a brand unique language (a low-useful resource language no longer on the solutions superhighway) from scratch, right by inserting a dictionary and grammar reference materials in context!

- Posttraining enhancements. Primarily the most up-to-date GPT-4 has seriously improved when put next to the distinctive GPT-4 when released, in retaining with John Schulman as a result of posttraining enhancements that unlocked latent model ability: on reasoning evals it’s made huge gains (e.g., ~50% -> 72% on MATH, ~40% to ~50% on GPQA) and on the LMSys leaderboardit’s made nearly 100-level elo soar (identical to the variation in elo between Claude 3 Haiku and the excellent elevated Claude 3 Opus, fashions that agree with a ~50x tag distinction).

A look by Epoch AI of a majority of these methods, appreciate scaffolding, plot advise, and so on, finds that methods appreciate this can customarily consequence in efficient compute gains of 5-30x on many benchmarks. METR (a firm that evaluates fashions) equally stumbled on very stunning performance enhancements on their contrivance of agentic tasks, by process of unhobbling from the the same GPT-4 nefarious model: from 5% with right the nefarious model, to 20% with the GPT-4 as posttrained on free up, to nearly 40% on the modern time from better posttraining, instruments, and agent scaffolding.

While it’s laborious to construct these on a unified efficient compute scale with compute and algorithmic efficiencies, it’s certain these are astronomical gains, no no longer as a lot as on a roughly identical magnitude because the compute scaleup and algorithmic efficiencies. (It additionally highlights the central feature of algorithmic growth: the 0.5 OOMs/year of compute efficiencies, already necessary, are easiest allotment of the epic, and set up alongside with unhobbling algorithmic growth total is even per chance a majority of the gains on the most up-to-date development.)

“Unhobbling” is what the truth is enabled these fashions to change into precious—and I’d argue that great of what is retaining aid many industrial gains on the modern time is the necessity for extra “unhobbling” of this kind. Indeed, fashions on the modern time are restful extremely hobbled! Shall we embrace:

- They don’t agree with lengthy-term memory.

- They’ll’t advise a laptop (they restful easiest agree with very dinky instruments).

- They restful mostly don’t deem sooner than they assert. When you quiz ChatGPT to write down an essay, that’s appreciate ready for a human to write down an essay by process of their initial circulation-of-consciousness.28

- They’ll (mostly) easiest have interaction in transient aid-and-forth dialogues, moderately than going away for a day or a week, brooding a pair of convey, researching varied approaches, consulting varied humans, and then writing you a longer file or pull query of.

- They’re mostly no longer personalized to you or your application (right a generic chatbot with a fast urged, moderately than having the total linked background in your firm and your work).

The odds right here are broad, and we’re fast picking low-hanging fruit right here. This is necessary: it’s totally contaminated to right factor in “GPT-6 ChatGPT.” With persevered unhobbling growth, the enhancements will doubtless be step-adjustments when put next to GPT-6 + RLHF. By 2027, moderately than a chatbot, you’re going to agree with something that appears to be like more appreciate an agent, appreciate a coworker.

From chatbot to agent-coworker

What may perhaps well perhaps well formidable unhobbling over the arrival years see appreciate? The fashion I deem it, there are three key ingredients:

1. Fixing the “onboarding convey”

GPT-4 has the raw smarts to discontinuance a tight chunk of many other folks’s jobs, nonetheless it absolutely’s fashion of appreciate a neat unique rent that right confirmed up 5 minutes ago: it doesn’t agree with any linked context, hasn’t read the firm medical doctors or Slack historical past or had conversations with contributors of the personnel, or spent any time realizing the firm-internal codebase. A neat unique rent isn’t that precious 5 minutes after arriving—nonetheless they are comparatively precious a month in! It looks appreciate it goes to be that you may perhaps well perhaps well per chance also factor in, as an illustration by process of very-lengthy-context, to “onboard” fashions appreciate we may perhaps well perhaps a brand unique human coworker. This alone may perhaps well perhaps well be a astronomical release.

2. The take a look at-time compute overhang (reasoning/error correction/system II for longer-horizon considerations)

Gentle now, fashions can basically easiest discontinuance fast tasks: you quiz them a inquire, and as well they offer you with with an answer. Nevertheless that’s extraordinarily limiting. Most useful cognitive work humans discontinuance is longer horizon—it doesn’t right contrivance conclude 5 minutes, nonetheless hours, days, weeks, or months.

A scientist that would easiest deem a tough convey for five minutes couldn’t plot any scientific breakthroughs. A tool engineer that would easiest write skeleton code for a single feature when requested wouldn’t be very precious—plot engineers are given a elevated project, and as well they then chase plot a conception, realize linked parts of the codebase or technical instruments, write varied modules and take a look at them incrementally, debug errors, search over the contrivance of that you may perhaps well perhaps well per chance also factor in alternate choices, and at final post a blinding pull query of that’s the halt consequence of weeks of labor. And masses others.

In essence, there may perhaps be a blinding take a look at-time compute overhang. Imagine every GPT-4 token as a word of internal monologue whenever you specialise in a pair of convey. Every GPT-4 token is comparatively neat, nonetheless it absolutely can for the time being easiest in actuality effectively advise on the repeat of ~hundreds of tokens for chains of thought coherently (effectively as even though you may perhaps well perhaps well per chance easiest employ a pair of minutes of internal monologue/thinking on a convey or challenge).

What if it may perhaps well well perhaps well advise tens of millions of tokens to deem and work on in actuality laborious considerations or bigger initiatives?

| Series of tokens | Fair like me engaged on something for… | |

| 100s | A few minutes | ChatGPT (we’re right here) |

| 1000s | Half of an hour | +1 OOMs take a look at-time compute |

| 10,000s | Half of a workday | +2 Uncles |

| 100,000s | A workweek | +3 Uncles |

| Millions | Plenty of months | +4 Uncles |

Even supposing the “per-token” intelligence were the the same, it’d be the variation between a neat particular person spending a couple of minutes vs. a few months on a convey. I don’t learn about you, nonetheless there’s great, great, great more I am capable of in about a months vs. a pair of minutes. If lets release “being in a build to deem and work on something for months-identical, moderately than about a-minutes-identical” for fashions, it may perhaps well well perhaps well release an insane soar in ability. There’s a astronomical overhang right here, many OOMs price.

Gentle now, fashions can’t discontinuance this yet. Even with recent advances in lengthy-context, this longer context mostly easiest works for the consumption of tokens, no longer the production of tokens—after a while, the model goes off the rails or gets caught. It’s no longer yet in a build to leave for a while to work on a convey or challenge by itself.29

Nevertheless unlocking take a look at-time compute also can merely be a topic of barely little “unhobbling” algorithmic wins. Probably a little quantity of RL helps a model learn to error factual (“hm, that doesn’t see right, let me double take a look at that”), plot plans, search over that you may perhaps well perhaps well per chance also factor in alternate choices, and so on. In a sense, the model already has most of the raw capabilities, it right desires to learn about a extra abilities on high to construct it all collectively.

In essence, we right want to educate the model a fashion of Machine II outer loop30 that lets it motive thru hard, lengthy-horizon initiatives.

If we prevail at instructing this outer loop, moderately than a fast chatbot answer of a pair paragraphs, factor in a circulation of tens of millions of words (coming in more mercurial than you may perhaps well perhaps well per chance also read them) because the model thinks thru considerations, makes advise of instruments, tries varied approaches, does learn, revises its work, coordinates with others, and completes huge initiatives by itself.

In varied domains, appreciate AI programs for board video games, it’s been demonstrated that you may perhaps well perhaps well per chance also advise more take a look at-time compute (additionally identified as inference-time compute) to replace for working in direction of compute.

If a identical relationship held in our case, if lets release + 4 OOMs of take a look at-time compute, that will doubtless be identical to + 3OOMs of pretraining compute, i.e. very roughly something appreciate the soar between GPT-3 and GPT-4. (I.e., fixing this “unhobbling” may perhaps well perhaps well be identical to a astronomical OOM scaleup.)

3. The usage of a laptop

This is per chance the most easy of the three. ChatGPT today may perhaps well perhaps well be de facto appreciate a human that sits in an isolated field that you may perhaps well perhaps well per chance also textual relate material. While early unhobbling enhancements instruct fashions to make advise of particular particular person isolated instruments, I quiz that with multimodal fashions we are capable of soon be in a build to discontinuance this in one fell swoop: we are capable of merely allow fashions to make advise of a laptop appreciate a human would.

That ability becoming a member of your Zoom calls, researching issues on-line, messaging and emailing other folks, learning shared medical doctors, the usage of your apps and dev tooling, and so on. (Keep in mind that, for fashions to plot the most advise of this in longer-horizon loops, this can chase hand-in-hand with unlocking take a look at-time compute.)

By the discontinuance of this, I quiz us to derive something that appears to be like loads appreciate a tumble-in some distance-off worker. An agent that joins your firm, is onboarded appreciate a brand unique human rent, messages you and colleagues on Slack and makes advise of your softwares, makes pull requests, and that, given huge initiatives, can discontinuance the model-identical of a human going away for weeks to independently total the challenge. You’ll per chance want seriously better nefarious fashions than GPT-4 to release this, nonetheless per chance no longer even that great better—masses of juice is in fixing the certain and common methods fashions are restful hobbled.

By the fashion, I quiz the centrality of unhobbling to consequence in a seriously entertaining “sonic yell” discontinuance when it involves industrial gains. Intermediate fashions between now and the tumble-in some distance-off worker would require masses of schlep to change workflows and manufacture infrastructure to mix and earn economic fee from. The tumble-in some distance-off worker will doubtless be dramatically more straightforward to mix—right, well, tumble them in to automate the total jobs that would be executed remotely. It looks believable that the schlep will contrivance conclude longer than the unhobbling, that is, by the time the tumble-in some distance-off worker is in a build to automate a blinding series of jobs, intermediate fashions won’t yet were fully harnessed and integrated—so the soar in economic fee generated would be seriously discontinuous.

The next four years

Striking the numbers collectively, we also can merely restful (roughly) quiz yet any other GPT-2-to-GPT-4-sized soar within the 4 years following GPT-4, by the discontinuance of 2027.

- GPT-2 to GPT-4 changed into roughly a 4.5–6 OOM nefarious efficient compute scaleup (physical compute and algorithmic efficiencies), plus fundamental “unhobbling” gains (from nefarious model to chatbot).

- In the next 4 years, we also can merely restful quiz 3–6 OOMs of nefarious efficient compute scaleup (physical compute and algorithmic efficiencies)—with per chance a easiest wager of ~5 OOMs—plus step-adjustments in utility and gains unlocked by “unhobbling” (from chatbot to agent/tumble-in some distance-off worker).

To set up this in standpoint, narrate GPT-4 working in direction of took 3 months. In 2027, a leading AI lab will doubtless be in a build to prepare a GPT-4-level model in a minute.31 The OOM efficient compute scaleup will doubtless be dramatic.

The build will that contrivance conclude us?

GPT-2 to GPT-4 took us from ~preschooler to ~neat high-schooler; from barely being in a build to output about a cohesive sentences to acing high-college exams and being a precious coding assistant. That changed into an insane soar. If this is the intelligence hole we’ll duvet all over again, where will that contrivance conclude us?32 We also can merely restful no longer be surprised if that takes us very, very some distance. Seemingly, this can contrivance conclude us to fashions that will well perhaps outperform PhDs and the finest consultants in a field.

(One orderly manner to deem this is that the most up-to-date development of AI growth is persevering with at roughly 3x the tear of minute one development. Your 3x-flee-minute one right graduated highschool; it’ll be taking your job sooner than it!)

All all over again, seriously, don’t right factor in an extremely neat ChatGPT: unhobbling gains also can merely restful indicate that this looks more appreciate a tumble-in some distance-off worker, an extremely neat agent that will well perhaps motive and conception and error-factual and knows all the issues about you and your firm and can work on a convey independently for weeks.

We’re on course for AGI by 2027. These AI programs will basically be in a build to automate basically all cognitive jobs (deem: all jobs that would be executed remotely).

To make sure—the error bars are stunning. Progress may perhaps well perhaps well stall as we flee out of data, if the algorithmic breakthroughs wanted to smash thru the solutions wall stamp more difficult than expected. Maybe unhobbling doesn’t chase as some distance, and we’re caught with merely knowledgeable chatbots, moderately than knowledgeable coworkers. Probably the final decade-lengthy trendlines destroy, or scaling deep learning hits a wall for precise this time. (Or an algorithmic step forward, even straightforward unhobbling that unleashes the take a look at-time compute overhang, is on the total a paradigm-shift, accelerating issues extra and resulting in AGI even earlier.)

No topic all the issues, we’re racing thru the OOMs, and it requires no esoteric beliefs, merely development extrapolation of straight lines, to contrivance conclude the risk of AGI—right AGI—by 2027 extraordinarily seriously.

It looks appreciate many are within the game of downward-defining AGI for the time being, as right as in actuality factual chatbot or whatever. What I indicate is an AI system that would fully automate my or my friends’ job, that would fully discontinuance the work of an AI researcher or engineer. Probably some areas, appreciate robotics, also can contrivance conclude longer to determine by default. And the societal rollout, e.g. in medical or honest right professions, may perhaps well perhaps well with out misfortune be slowed by societal choices or law. Nevertheless once fashions can automate AI learn itself, that’s sufficient—sufficient to kick off intense feedback loops—and lets very mercurial plot extra growth, the automatic AI engineers themselves fixing the total final bottlenecks to totally automating all the issues. Particularly, tens of millions of automatic researchers may perhaps well perhaps well very plausibly compress a decade of extra algorithmic growth into a year or less. AGI will merely be a little model of the superintelligence soon to be conscious. (Extra on that within the subsequent part.)

No topic all the issues, discontinuance no longer quiz the vertiginous tear of growth to abate. The trendlines see harmless, nonetheless their implications are intense. As with every generation sooner than them, every unique generation of fashions will dumbfound most onlookers; they’ll be incredulous when, very soon, fashions resolve extremely hard science considerations that would contrivance conclude PhDs days, when they’re whizzing spherical your computer doing all your job, when they’re writing codebases with tens of millions of lines of code from scratch, when yearly or two the economic fee generated by these fashions 10xs. Omit scifi, count the OOMs: it’s what we also can merely restful quiz. AGI is no longer any longer a some distance-off myth. Scaling up straightforward deep learning methods has right labored, the fashions right want to learn, and we’re about to discontinuance yet any other 100,000x+ by the discontinuance of 2027. It won’t be lengthy sooner than they’re smarter than us.

Next put up in series:

II. From AGI to Superintelligence: the Intelligence Explosion

Addendum. Racing thru the OOMs: It’s this decade or bust

I frail to be more skeptical of fast timelines to AGI. One motive is that it looked unreasonable to privilege this decade, concentrating so great AGI-probability-mass on it (it looked appreciate a standard fallacy to deem “oh we’re so particular”). I thought we’ve to be unsure about what it takes to derive AGI, which also can merely restful consequence in a terrific more “smeared-out” probability distribution over after we also can derive AGI.

Then again, I’ve changed my mind: seriously, our uncertainty over what it takes to derive AGI ought to be over uncles (of efficient compute), moderately than over years.

We’re racing thru the OOMs this decade. Even at its bygone heyday, Moore’s law changed into easiest 1–1.5 OOMs/decade. I estimate that we are going to discontinuance ~5 OOMs in 4 years, and over ~10 this decade total.

In essence, we’re within the midst of a astronomical scaleup reaping one-time gains this decade, and growth thru the OOMs will doubtless be multiples slower thereafter. If this scaleup doesn’t derive us to AGI within the next 5-10 years, it will doubtless be a lengthy manner out.

- Spending scaleup: Spending a million greenbacks on a model frail to be unhealthy; by the discontinuance of the final decade, we are capable of doubtless agree with $100B or $1T clusters. Going great greater than that will doubtless be laborious; that’s already basically the possible limit (each and each when it involves what huge industry can afford, and even right as a allotment of GDP). Thereafter all we’ve is glacial 2%/year development precise GDP growth to enlarge this.

- Hardware gains: AI hardware has been bettering great more mercurial than Moore’s law. That’s because we’ve been specializing chips for AI workloads. Shall we embrace, we’ve gone from CPUs to GPUs; tailored chips for Transformers; and we’ve gone down to great decrease precision quantity formats, from fp64/fp32 for passe supercomputing to fp8 on H100s. These are stunning gains, nonetheless by the discontinuance of the final decade we’ll doubtless agree with totally-in actuality honest right AI-particular chips, with out great extra past-Moore’s law gains that you may perhaps well perhaps well per chance also factor in.

- Algorithmic growth: In the arrival decade, AI labs will invest tens of billions in algorithmic R&D, and the total smartest other folks within the realm will doubtless be engaged on this; from minute efficiencies to unique paradigms, we’ll be picking a total bunch the low-hanging fruit. We per chance won’t attain any fashion of laborious limit (even though “unhobblings” are doubtless finite), nonetheless no no longer as a lot as the tear of enhancements also can merely restful leisurely down, because the quickly growth (in $ and human capital investments) essentially slows down (e.g., most of the neat STEM skills will already be engaged on AI). (That acknowledged, this is the most unsure to foretell, and the availability of most of the uncertainty on the OOMs within the 2030s on the disclose above.)

Attach collectively, this implies we’re racing thru many more OOMs within the next decade than we also can in a pair of decades thereafter. Maybe it’s sufficient—and we derive AGI soon—or we are capable of be in for a lengthy, leisurely slog. You and I will moderately disagree on the median time to AGI, reckoning on how laborious we deem achieving AGI will doubtless be—nonetheless given how we’re racing thru the OOMs today, absolutely your modal AGI year also can merely restful someday later this decade or so.