Cloudflare offers one-click solution to block AI bots

Serving tech enthusiasts for over 25 years.

TechSpot means tech analysis and advice you can trust.

Why it matters: There is a growing consensus that generative AI has the potential to make the open web much worse than it was before. Currently, all big tech corporations and AI startups rely on scraping all the original content they can off the web to train their AI models. The problem is that an overwhelming majority of websites are not cool with that, nor have they given permission for it. But hey, just ask Microsoft's AI CEO, who believes content on the open web is "freeware."

Just this past week, a report from Akamai reconfirmed that bots make up an enormous share of total web traffic, and that AI is making things much easier for cybercriminals and dishonest ventures.

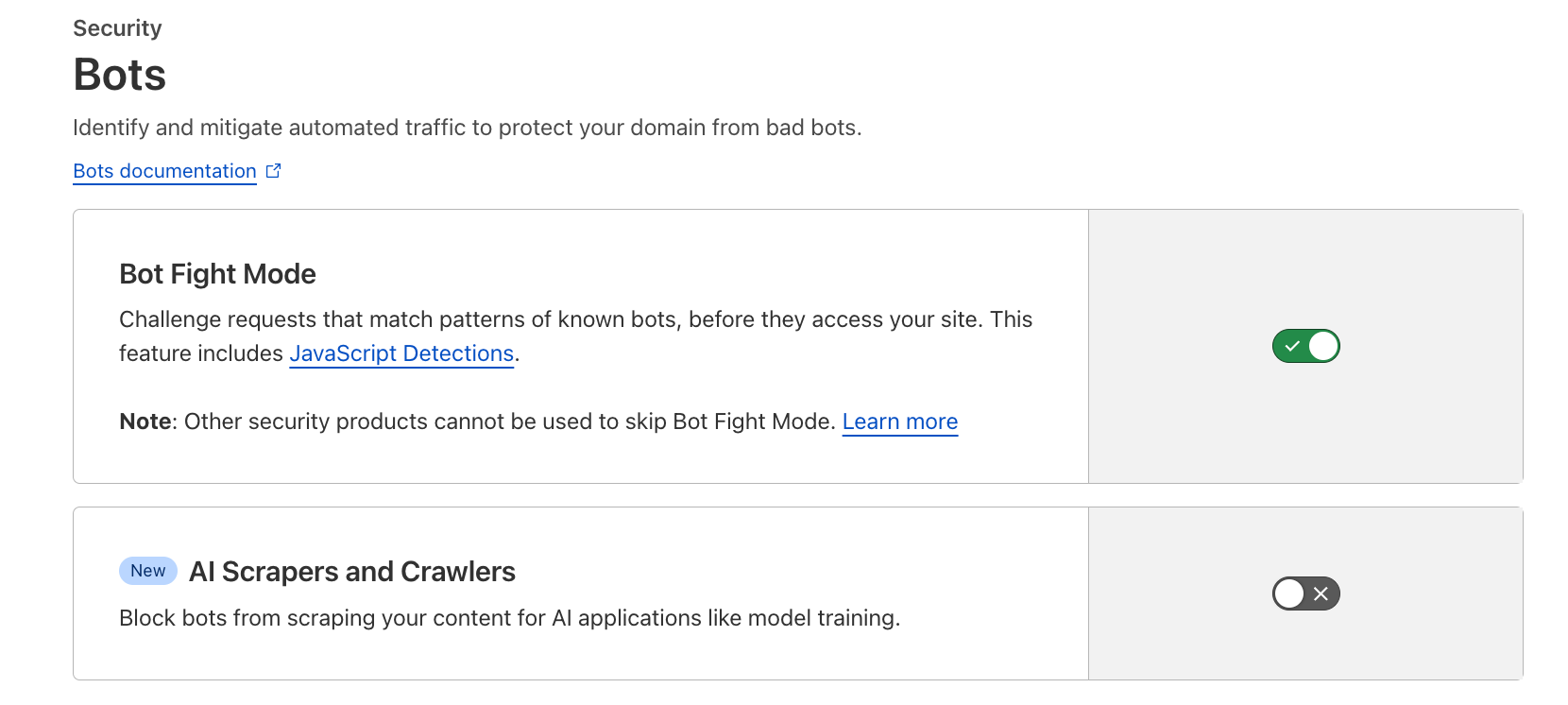

Websites and content creators using the content delivery and firewall services provided by Cloudflare now have an additional, easy-to-use solution to curb Big Tech's ability to unleash its bots and scrape web content without express authorization.

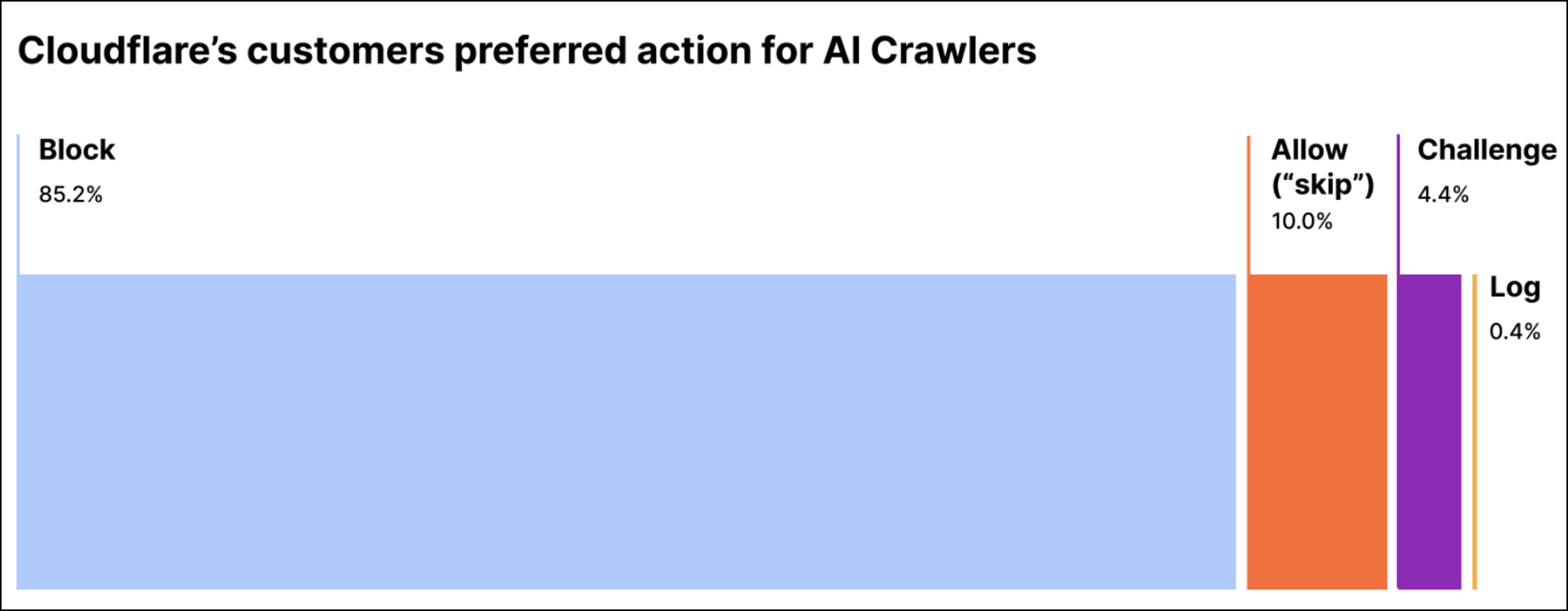

Most popular AI companies, like OpenAI, have started to provide a way to block their crawling bots through custom rules that can be added to a robots.txt file on the server. However, these methods only work when the bot has been designed to actually follow those rules. The problem is that 1) not all companies are willing to honor robots.txt directives, and 2) many AI companies had already scraped everything they could before offering this "opt out." Cloudflare says that an overwhelming majority of its customers, as much as 85 percent, have already opted to block AI bots this way.
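As a minimal sketch of how a robots.txt opt-out works from the crawler's side, the Python snippet below uses the standard library's `urllib.robotparser` to check whether a given user agent may fetch a page. The robots.txt content, the `is_allowed` helper, and the example URL are illustrative assumptions, not taken from Cloudflare or any AI company. The user-agent tokens simply reuse the bot names mentioned in this article and should be verified against each operator's documentation. The check only has an effect if the crawler chooses to run it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for illustration. The user-agent tokens reuse the
# bot names from the article; verify them against each operator's docs.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: ClaudeBot
Disallow: /

User-agent: Bytespider
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(user_agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return True if robots_txt permits user_agent to fetch url.

    This is the check a well-behaved crawler performs before requesting a
    page. Nothing forces a crawler to run it, which is the weakness the
    article describes.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for agent in ("GPTBot", "ClaudeBot", "Bytespider", "SomeOtherBot"):
        print(agent, is_allowed(agent, "https://example.com/article"))
```

A crawler that skips this check sees no difference at all, which is why server-side blocking of the kind Cloudflare offers is needed for bots that ignore the file.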

The new one-click solution provided by Cloudflare is available to both free and paying customers, and it should put up an effective fight against AI bots that do not follow robots.txt rules. Cloudflare can identify bots and create an individual fingerprint for each one, and it vows to automatically update its fingerprint database over time.

As one of the largest CDN networks on the web, Cloudflare can extrapolate data from over 57 million network requests per second on average.

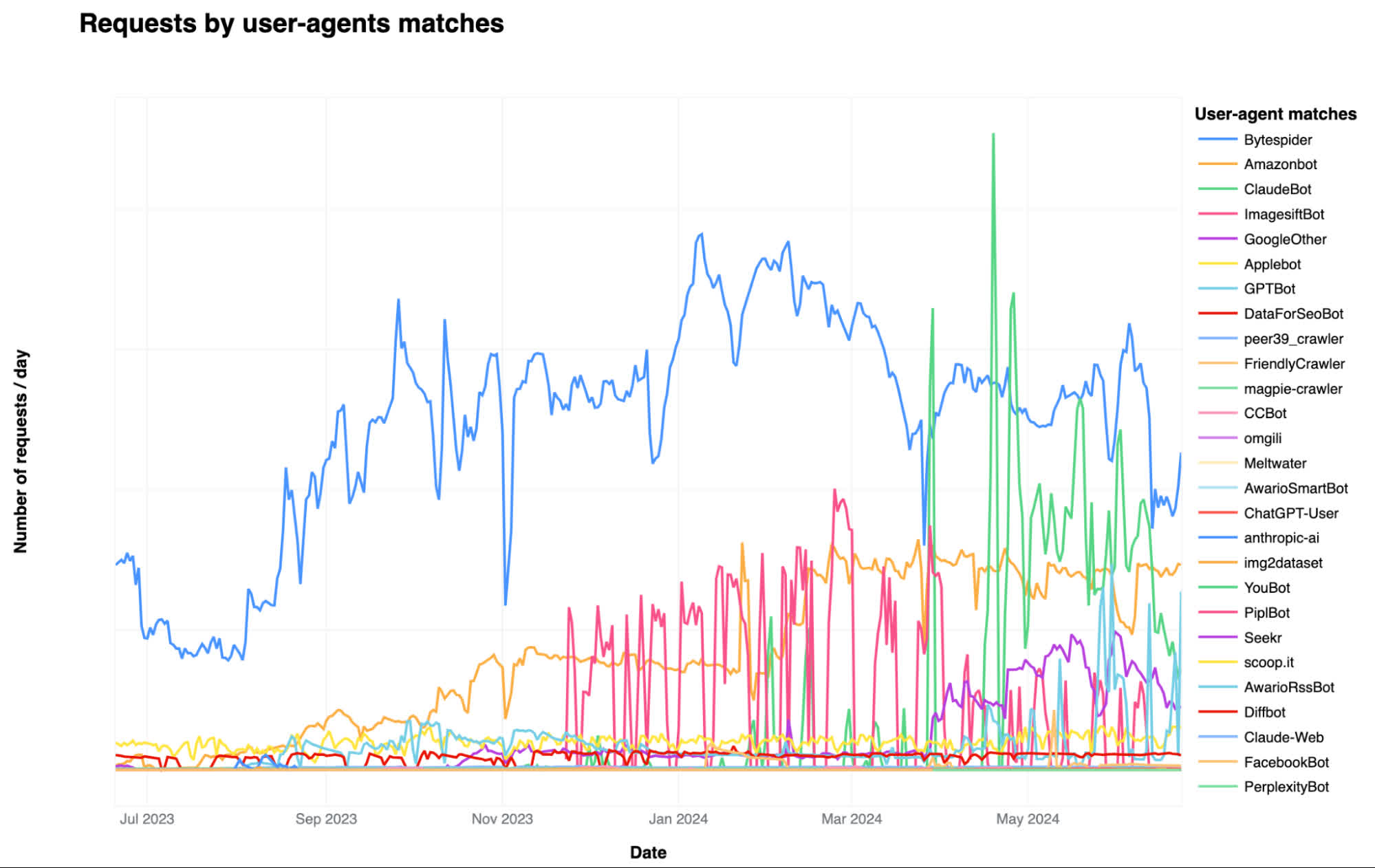

The company put together a list of the most active AI bots pillaging today's web, with Bytespider, GPTBot, and ClaudeBot being the three biggest ones by share of websites accessed. Bytespider is operated by ByteDance, the Chinese company that owns TikTok, and is likely using content scraped from 40% of Cloudflare-protected websites to train its large language models.

GPTBot is accessing 35% of websites and is collecting data to train ChatGPT and other generative AI services provided by OpenAI. ClaudeBot has recently increased its request volume up to 11%, Cloudflare says, and is used to train the namesake family of LLMs developed by Anthropic.

While these well-known bots should be easier to identify through static analysis, Cloudflare can also detect bots pretending to be real people browsing the web.
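For bots that announce themselves, static identification can be as simple as matching the User-Agent header against a list of known tokens. The Python sketch below illustrates that idea only. It is not Cloudflare's method. The token list reuses the bot names from this article, and the `declared_ai_bot` helper and the sample header strings are hypothetical.

```python
from typing import Optional

# Hypothetical token list built from the bot names in the article.
KNOWN_AI_BOT_TOKENS = ("bytespider", "gptbot", "claudebot")


def declared_ai_bot(user_agent: str) -> Optional[str]:
    """Return the matching token if the User-Agent declares a known AI bot.

    Returns None when no token matches, including when a bot spoofs a
    browser User-Agent.
    """
    ua = user_agent.lower()
    for token in KNOWN_AI_BOT_TOKENS:
        if token in ua:
            return token
    return None


if __name__ == "__main__":
    # Illustrative header values, not captured traffic.
    print(declared_ai_bot("Mozilla/5.0 (compatible; GPTBot/1.0)"))
    print(declared_ai_bot("Mozilla/5.0 (Windows NT 10.0; Win64; x64)"))
```

A check like this fails as soon as a bot sends a browser-like header, so catching evasive crawlers takes more than string matching.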

The company developed its own global machine learning model and is actually using AI technology to spot AI bots pretending to be something else. Cloudflare said its model was able to properly flag traffic coming from evasive AI bots, and that it can be used to detect new scraping tools and malicious bots in the future without needing to generate a new bot fingerprint first.