This camera captures 156.3 trillion frames per second

Scientists have created a blazing-fast scientific camera that shoots images at an encoding rate of 156.3 terahertz (THz) to individual pixels, equivalent to 156.3 trillion frames per second. Dubbed SCARF (swept-coded aperture real-time femtophotography), the research-grade camera could lead to breakthroughs in fields studying micro-events that come and go too quickly for today's most expensive scientific sensors.
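To put that number in perspective, here is a quick back-of-the-envelope calculation. It is only arithmetic on the 156.3 THz figure quoted above, not something taken from the paper; the variable names are illustrative, and the speed of light is the standard SI value.

```python
# Back-of-the-envelope: how short is one frame at 156.3 THz?
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact by SI definition

encoding_rate_hz = 156.3e12  # 156.3 THz, as quoted in the article

# Time between consecutive frames, in seconds and femtoseconds.
frame_interval_s = 1.0 / encoding_rate_hz
frame_interval_fs = frame_interval_s * 1e15

# Distance light covers in a vacuum during one frame, in micrometers.
light_travel_um = SPEED_OF_LIGHT_M_PER_S * frame_interval_s * 1e6

print(f"{frame_interval_fs:.2f} fs per frame")
print(f"{light_travel_um:.2f} micrometers of light travel per frame")
```

Each frame works out to roughly 6.4 femtoseconds, during which light itself travels only about 1.9 micrometers.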

SCARF has successfully captured ultrafast events like absorption in a semiconductor and the demagnetization of a metal alloy. The research could open new frontiers in areas as diverse as shock wave mechanics or developing more effective medicines.

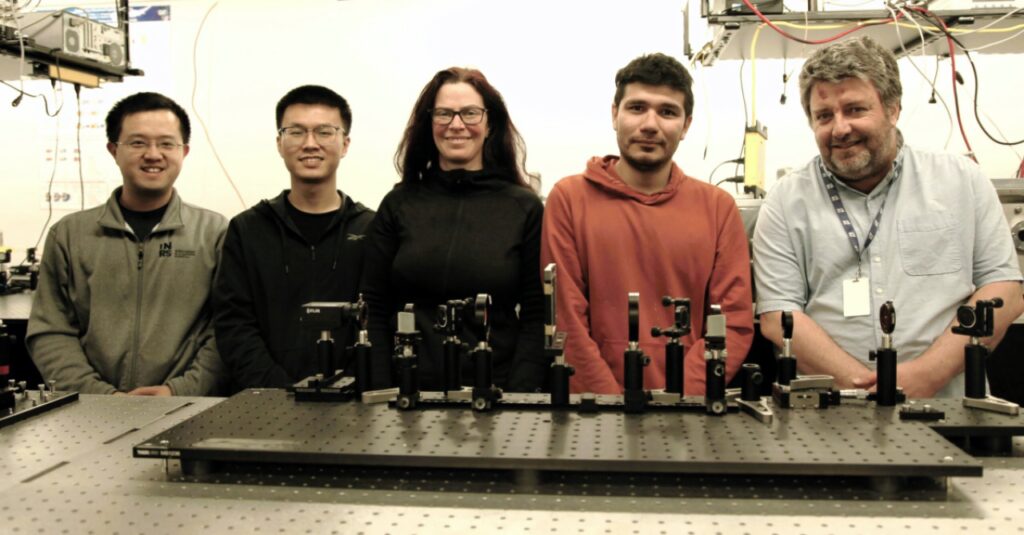

Leading the research team was Professor Jinyang Liang of Canada's Institut national de la recherche scientifique (INRS). He's a globally recognized pioneer in ultrafast photography who built on his breakthroughs from a separate study six years ago. The current research was published in Nature, summarized in a press release from INRS and first reported on by Science Daily.

Professor Liang and company tailored their research as a fresh take on ultrafast cameras. Typically, these systems use a sequential approach: capture frames one at a time and piece them together to observe the objects in motion. But that approach has limitations. "For example, phenomena such as femtosecond laser ablation, shock-wave interaction with living cells, and optical chaos cannot be studied this way," Liang said.

The new camera builds on Liang's previous research to upend traditional ultrafast camera logic. "SCARF overcomes these challenges," INRS communication officer Julie Robert wrote in a statement. "Its imaging modality enables ultrafast sweeping of a static coded aperture while not shearing the ultrafast phenomenon. This provides full-sequence encoding rates of up to 156.3 THz to individual pixels on a camera with a charge-coupled device (CCD). These results can be obtained in a single shot at tunable frame rates and spatial scales in both reflection and transmission modes."

In extremely simplified terms, that means the camera uses a computational imaging modality to capture spatial information by letting light enter its sensor at slightly different times. Not having to process the spatial data at the moment of capture is part of what frees the camera to record those extremely quick "chirped" laser pulses at up to 156.3 trillion times per second. The images' raw data can then be processed by a computer algorithm that decodes the time-staggered inputs, transforming each of the trillions of frames into a complete picture.
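The encoding half of that idea can be illustrated with a toy model. This is a loose sketch under heavy assumptions, not the SCARF team's actual optics or algorithm: it assumes a random binary mask that slides one column per frame, and a sensor that simply sums all the masked frames into one snapshot. The names `sweep_encode` and `random_binary_mask` are hypothetical, and the decoding step, which would need a proper reconstruction algorithm, is left out.

```python
import random


def random_binary_mask(height, width, seed=0):
    """Build a static pseudo-random pattern of open (1) and blocked (0) cells."""
    rng = random.Random(seed)
    return [[rng.randint(0, 1) for _ in range(width)] for _ in range(height)]


def sweep_encode(frames, mask):
    """Sum all frames into one snapshot, gating frame t with the mask shifted by t columns."""
    n_frames = len(frames)
    height = len(frames[0])
    width = len(frames[0][0])
    # The mask must be wide enough to slide one column per frame.
    assert len(mask) == height and len(mask[0]) >= width + n_frames - 1
    snapshot = [[0.0] * width for _ in range(height)]
    for t, frame in enumerate(frames):
        for y in range(height):
            for x in range(width):
                snapshot[y][x] += mask[y][x + t] * frame[y][x]
    return snapshot


# Toy scene: a bright dot on the middle row moving one pixel right per frame.
n_frames, height, width = 4, 3, 6
frames = [
    [[1.0 if (y == 1 and x == t) else 0.0 for x in range(width)]
     for y in range(height)]
    for t in range(n_frames)
]

mask = random_binary_mask(height, width + n_frames - 1)
snapshot = sweep_encode(frames, mask)  # one 2D image holding all four time steps
```

Because every time step passes through a differently shifted copy of the same known pattern, the single snapshot carries a time-dependent signature at each pixel. That signature is what a reconstruction algorithm would exploit to separate the frames again.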

Remarkably, it did so "using off-the-shelf and passive optical components," as the paper describes. The team describes SCARF as low-cost with low power consumption and high measurement quality compared to existing techniques.

Although SCARF is focused more on research than consumers, the team is already working with two companies, Axis Photonique and Few-Cycle, to develop commercial versions, presumably for peers at other higher-education or scientific institutions.

For a more technical explanation of the camera and its potential applications, you can view the full paper in Nature.