Cloudflare blames recent outage on BGP hijacking incident

Internet giant Cloudflare reports that its DNS resolver service, 1.1.1.1, was recently unreachable or degraded for some of its customers because of a combination of Border Gateway Protocol (BGP) hijacking and a route leak.

The incident took place last week and affected 300 networks in 70 countries. Despite these numbers, the company says that the impact was “quite low” and that in some countries users didn’t even notice it.

Incident details

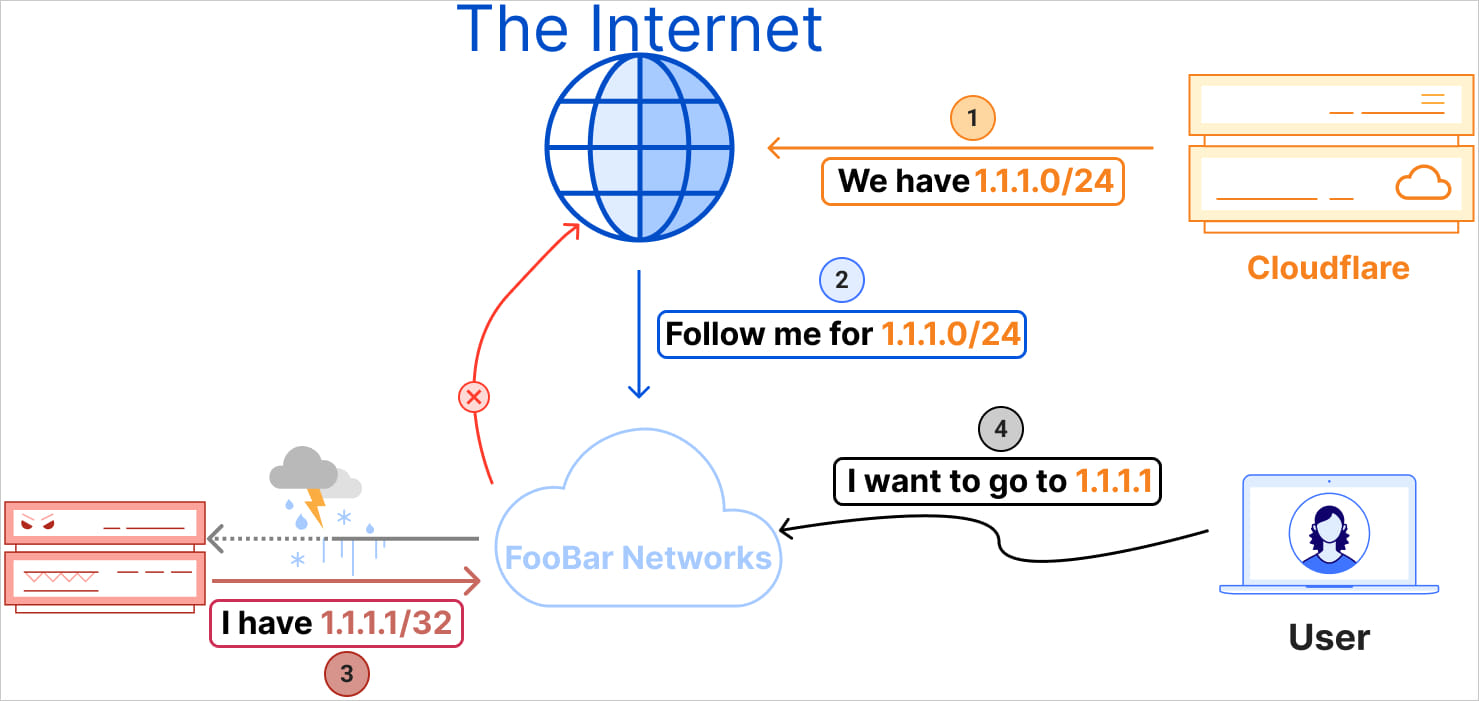

Cloudflare says that at 18:51 UTC on June 27, Eletronet S.A. (AS267613) began announcing the 1.1.1.1/32 IP address to its peers and upstream providers.

This incorrect announcement was accepted by multiple networks, including a Tier 1 provider, which treated it as a Remote Triggered Blackhole (RTBH) route.

The hijack took hold because BGP routing favors the most specific route. AS267613’s announcement of 1.1.1.1/32 was more specific than Cloudflare’s 1.1.1.0/24, leading networks to incorrectly route traffic to AS267613.
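The most-specific-route rule can be illustrated with a minimal sketch. The two-entry table below is a hypothetical simplification of a real routing table, the function name `best_route` is invented for this example, and AS13335 (Cloudflare's AS number) is not stated in the article. Real BGP route selection involves many more attributes; this shows only the prefix-length preference.

```python
import ipaddress

# Hypothetical routing table: prefix -> origin, using the prefixes from the incident.
ROUTES = {
    ipaddress.ip_network("1.1.1.0/24"): "AS13335 (Cloudflare)",
    ipaddress.ip_network("1.1.1.1/32"): "AS267613 (Eletronet)",
}


def best_route(destination, routes):
    """Return the matching prefix with the longest prefix length, or None."""
    addr = ipaddress.ip_address(destination)
    matches = [net for net in routes if addr in net]
    if not matches:
        return None
    # Longest prefix match: the most specific covering route wins.
    return max(matches, key=lambda net: net.prefixlen)


if __name__ == "__main__":
    for dest in ("1.1.1.1", "1.1.1.2"):
        chosen = best_route(dest, ROUTES)
        print(dest, "->", chosen, "via", ROUTES[chosen])
```

In this sketch, traffic to 1.1.1.1 follows the /32 while the rest of the /24 still follows Cloudflare's announcement, which is consistent with only the resolver address being affected by the hijack.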

As a consequence, traffic intended for Cloudflare’s 1.1.1.1 DNS resolver was blackholed or rejected, and the service became unavailable for some users.

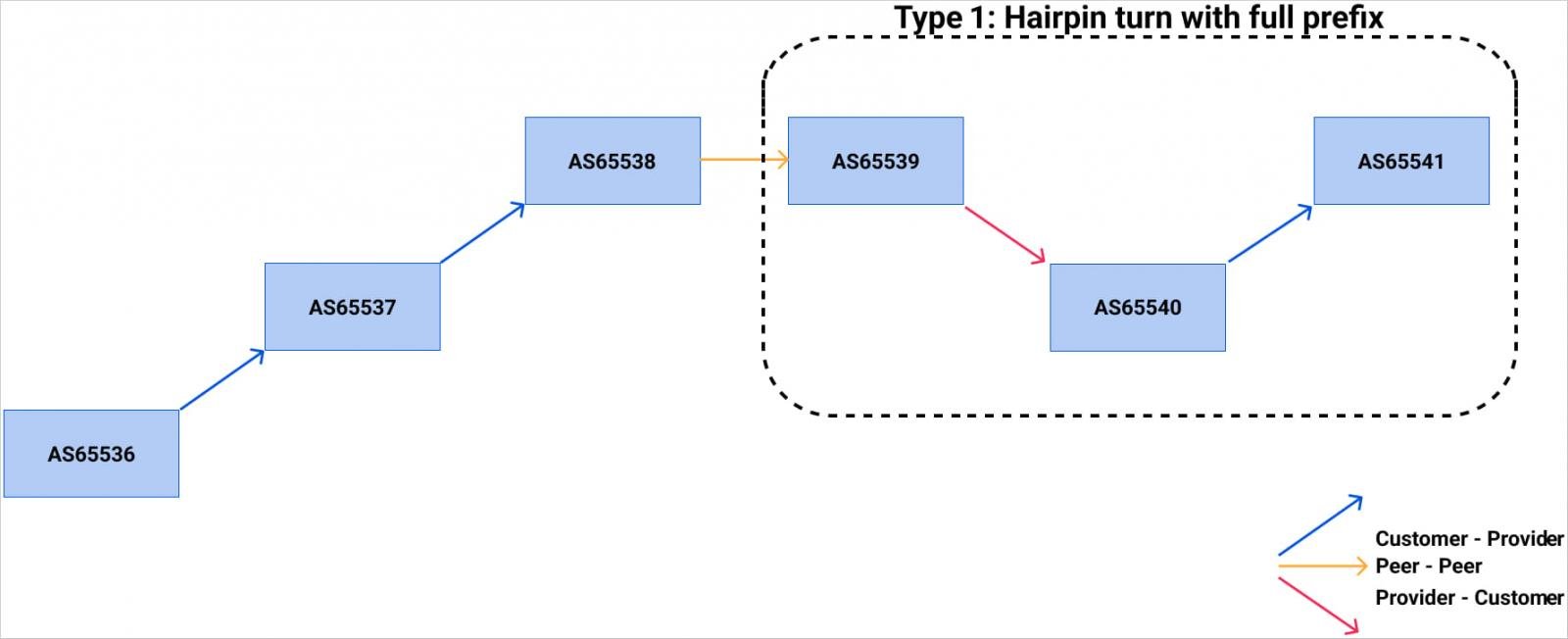

One minute later, at 18:52 UTC, Nova Rede de Telecomunicações Ltda (AS262504) erroneously leaked 1.1.1.0/24 upstream to AS1031, which propagated it further, affecting global routing.

This leak altered the normal BGP routing paths, causing traffic destined for 1.1.1.1 to be misrouted, compounding the hijacking problem and causing additional reachability and latency issues.

Cloudflare identified the problems at around 20:00 UTC and resolved the hijack roughly two hours later. The route leak was resolved at 02:28 UTC.

Remediation effort

Cloudflare’s first line of response was to engage with the networks involved in the incident while also disabling peering sessions with all problematic networks to mitigate the impact and prevent further propagation of incorrect routes.

The company explains that the incorrect announcements didn’t affect its internal network routing thanks to its adoption of Resource Public Key Infrastructure (RPKI), which led to the invalid routes being rejected automatically.
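As a rough illustration of how RPKI-based origin validation rejects such a route, here is a minimal sketch of the valid / invalid / not-found classification described in RFC 6811. The `Roa` class, the `validate` function, and the single ROA entry (1.1.1.0/24, maximum length 24, origin AS13335) are assumptions made for this example, not data taken from Cloudflare's write-up.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Roa:
    """Simplified Route Origin Authorization (hypothetical structure)."""
    prefix: ipaddress.IPv4Network
    max_length: int
    origin_asn: int


# Assumed ROA for illustration only.
ROAS = [Roa(ipaddress.ip_network("1.1.1.0/24"), 24, 13335)]


def validate(route_prefix, origin_asn, roas):
    """Classify an announcement as 'valid', 'invalid', or 'not-found'."""
    route = ipaddress.ip_network(route_prefix)
    covered = False
    for roa in roas:
        if route.subnet_of(roa.prefix):
            covered = True
            # Valid only if the length is allowed and the origin matches.
            if route.prefixlen <= roa.max_length and origin_asn == roa.origin_asn:
                return "valid"
    # Covered by a ROA but matching none of them means invalid.
    return "invalid" if covered else "not-found"


if __name__ == "__main__":
    print(validate("1.1.1.1/32", 267613, ROAS))
    print(validate("1.1.1.0/24", 13335, ROAS))
```

Under these assumptions the hijacked /32 fails on both prefix length and origin. Origin validation checks only the originating AS, so a leaked route that keeps the legitimate origin would still pass, which is the gap that AS path validation is meant to address.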

Long-term solutions Cloudflare presented in its postmortem write-up include:

- Improve route leak detection systems by incorporating more data sources and integrating real-time data points.

- Promote the adoption of Resource Public Key Infrastructure (RPKI) for Route Origin Validation (ROV).

- Promote the adoption of the Mutually Agreed Norms for Routing Security (MANRS) principles, which include rejecting invalid prefix lengths and implementing robust filtering mechanisms.

- Encourage networks to reject IPv4 prefixes longer than /24 in the Default-Free Zone (DFZ).

- Advocate for deploying ASPA objects (currently drafted by the IETF), which are used to validate the AS path in BGP announcements.

- Explore the potential of implementing RFC 9234 and Discard Origin Authorization (DOA).
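The recommendation to reject IPv4 prefixes longer than /24 amounts to a simple prefix-length check on incoming announcements. The sketch below is illustrative only: `accept_announcement` and `MAX_IPV4_PREFIXLEN` are hypothetical names, and production filters are written in router policy languages rather than Python.

```python
import ipaddress

# Longest IPv4 prefix accepted in this sketch, per the /24 recommendation.
MAX_IPV4_PREFIXLEN = 24


def accept_announcement(prefix):
    """Return True if an IPv4 announcement passes the prefix-length filter."""
    net = ipaddress.ip_network(prefix)
    if net.version != 4:
        # This sketch covers IPv4 only and passes other families through.
        return True
    return net.prefixlen <= MAX_IPV4_PREFIXLEN


if __name__ == "__main__":
    for prefix in ("1.1.1.0/24", "1.1.1.1/32"):
        verdict = "accept" if accept_announcement(prefix) else "reject"
        print(prefix, verdict)
```

A network applying a filter like this would have dropped the 1.1.1.1/32 announcement regardless of its origin, while still accepting Cloudflare's 1.1.1.0/24.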